OpenAI Simplifies Voice Assistant Development: 2024 Event Highlights


The 2024 OpenAI event showcased advances that substantially simplify voice assistant development. This article summarizes the key takeaways, focusing on how these tools help developers build more capable and user-friendly voice interfaces. We'll explore the streamlined workflows, enhanced capabilities, and accessibility improvements unveiled at the event.

Streamlined Development Process with Pre-trained Models

OpenAI's pre-trained models drastically reduce the time and resources required for voice assistant development. Instead of building everything from scratch, developers can leverage these powerful tools to accelerate the prototyping and deployment phases.

- Reduced coding requirements: Pre-trained models handle much of the heavy lifting in natural language processing (NLP), significantly reducing the amount of custom code needed.

- Faster prototyping: Developers can quickly build and test voice assistant prototypes, iterating on designs and features much more efficiently.

- Access to powerful NLP capabilities: These models offer state-of-the-art NLP capabilities, including speech-to-text conversion, intent recognition, and natural language generation, all readily available.

- Cost-effectiveness: Utilizing pre-trained models reduces development costs associated with extensive coding, training data acquisition, and specialized expertise.

The event highlighted models such as Whisper, OpenAI's speech recognition model, which transcribes speech across many languages and accents. Whisper's robustness in noisy environments and across varied speaking styles was particularly impressive. These models are available through OpenAI's API, with official SDKs for languages such as Python and JavaScript, which further simplifies the development workflow.
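As a rough illustration of how little code a Whisper transcription needs, here is a minimal Python sketch. It assumes the official `openai` Python package (version 1.x), where a client created with `OpenAI()` exposes `audio.transcriptions.create`, and the hosted model name `whisper-1`. The helper name `transcribe_audio` is hypothetical. The client is passed in as a parameter so the function can be exercised without an API key.

```python
from pathlib import Path
from typing import Any, Optional


def transcribe_audio(client: Any, audio_path: str, language: Optional[str] = None) -> str:
    """Transcribe an audio file with the hosted Whisper model.

    `client` is expected to be an `openai.OpenAI()` instance (openai 1.x).
    It is injected rather than created here so the function is testable offline.
    """
    path = Path(audio_path)
    if not path.is_file():
        raise FileNotFoundError(audio_path)

    kwargs = {"model": "whisper-1"}
    if language is not None:
        # Optional ISO-639-1 hint such as "en" or "de"; without it, Whisper auto-detects.
        kwargs["language"] = language

    # The API expects an open binary file handle for the audio upload.
    with path.open("rb") as audio_file:
        result = client.audio.transcriptions.create(file=audio_file, **kwargs)
    return result.text
```

In practice you might call `transcribe_audio(OpenAI(), "question.mp3")` after `from openai import OpenAI`, with the `OPENAI_API_KEY` environment variable set.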

Enhanced Natural Language Understanding (NLU) Capabilities

OpenAI's advancements in NLU significantly improve the accuracy and context awareness of voice assistants. This translates to more natural and intuitive interactions for users.

- Improved speech-to-text conversion: Enhanced accuracy even in challenging acoustic conditions, leading to fewer misinterpretations.

- Better intent recognition: Voice assistants can now more accurately understand the user's intentions, even with complex or ambiguous queries.

- Handling of complex queries: The models can now handle multi-part questions and nuanced requests, providing more comprehensive and helpful responses.

- Multi-lingual support: OpenAI's models support a wider range of languages, making voice assistants accessible to a global audience.

Real-world applications that showcase these improvements include more capable customer service chatbots, more accurate virtual assistants for scheduling appointments, and more responsive voice-controlled smart home devices. The event also showcased emotion detection and sentiment analysis features, which open the door to more empathetic and personalized interactions.
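Intent recognition for multi-part queries can be sketched by asking a chat model to split a transcript into structured intents. The Python below is a minimal sketch, not OpenAI's own method. It assumes the `openai` 1.x client's `chat.completions.create` with JSON mode (`response_format={"type": "json_object"}`). The model name `gpt-4o-mini`, the JSON schema, and the `Intent`, `parse_intents`, and `recognize_intents` names are all hypothetical choices.

```python
import json
from dataclasses import dataclass
from typing import Any, List


@dataclass
class Intent:
    name: str
    slots: dict


# Hypothetical prompt; JSON mode requires the word "JSON" to appear in the messages.
SYSTEM_PROMPT = (
    "You are the intent parser for a voice assistant. Split the user's request "
    "into separate intents and reply with JSON only, in the form "
    '{"intents": [{"name": "...", "slots": {...}}]}.'
)


def parse_intents(raw_json: str) -> List[Intent]:
    """Validate the model's JSON reply and convert it into Intent objects."""
    data = json.loads(raw_json)
    intents = []
    for item in data.get("intents", []) if isinstance(data, dict) else []:
        if not isinstance(item, dict) or "name" not in item:
            continue  # skip malformed entries instead of failing the whole turn
        slots = item.get("slots") or {}
        if not isinstance(slots, dict):
            slots = {}
        intents.append(Intent(name=str(item["name"]), slots=slots))
    return intents


def recognize_intents(client: Any, transcript: str, model: str = "gpt-4o-mini") -> List[Intent]:
    """Send a transcript to a chat model (assumed openai 1.x client) and parse its intents."""
    response = client.chat.completions.create(
        model=model,
        messages=[
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": transcript},
        ],
        response_format={"type": "json_object"},
    )
    return parse_intents(response.choices[0].message.content)
```

Validating the reply in `parse_intents`, rather than trusting it blindly, keeps one malformed entry from derailing an entire multi-part request.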

Improved Personalization and Customization Options

OpenAI's tools empower developers to create truly personalized voice assistant experiences. These tools allow for adaptive learning and user profile creation, leading to more tailored interactions.

- User profile creation: Developers can design systems to gather and utilize user data to create unique profiles, adapting the assistant’s behavior based on individual preferences.

- Adaptive learning capabilities: Voice assistants can learn from user interactions over time, refining their responses and becoming more effective.

- Customized voice responses: Developers can personalize the tone, style, and even the voice itself, enhancing the user's sense of connection with the assistant.

- Integration with user data: Integration with existing user data sources allows for seamless personalization across different platforms and applications.

However, personalization raises ethical and data privacy questions that developers must address. OpenAI's guidelines emphasize responsible data handling and transparency. Examples of personalization that improves the user experience include voice assistants that proactively suggest relevant information based on user history and customized news briefings.
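The profile-driven and adaptive behavior described above can be sketched in plain Python. Everything here is hypothetical: the `UserProfile` class, its fields, and the topic-counting heuristic are illustrative assumptions, not an OpenAI API. The resulting string could be sent as the system message to a language model, and `voice` could be passed to a text-to-speech call. Storing only coarse preferences, rather than raw recordings, is one way to respect the privacy concerns noted above.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import List


@dataclass
class UserProfile:
    """Hypothetical per-user profile; stores only coarse preferences, not raw audio."""
    name: str
    tone: str = "friendly"   # e.g. "friendly", "concise", "formal"
    voice: str = "alloy"     # TTS voice name chosen by the user
    topic_counts: Counter = field(default_factory=Counter)

    def record_interaction(self, topic: str) -> None:
        """Adaptive learning in its simplest form: count the topics the user asks about."""
        self.topic_counts[topic] += 1

    def top_topics(self, n: int = 3) -> List[str]:
        """Most frequent topics; ties keep the order in which topics first appeared."""
        return [topic for topic, _ in self.topic_counts.most_common(n)]

    def system_prompt(self) -> str:
        """Turn the profile into instructions for the language model."""
        prompt = f"You are a voice assistant for {self.name}. Use a {self.tone} tone."
        topics = self.top_topics()
        if topics:
            prompt += " The user often asks about: " + ", ".join(topics) + "."
        return prompt
```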

Accessibility Features and Inclusivity

OpenAI is committed to making voice assistants accessible to everyone. The 2024 event highlighted various features aimed at broadening inclusivity.

- Support for diverse languages and accents: Improved accuracy across a wide range of languages and accents ensures broader accessibility.

- Features for visually impaired users: OpenAI is actively developing features like improved screen reader compatibility and detailed audio descriptions to enhance usability.

- Customizable voice options: Users can select from a variety of voices and tones to match their preferences or needs.

Specific accessibility features showcased included improved speech synthesis for different languages and the introduction of new audio cues for visually impaired users. OpenAI also announced partnerships with accessibility organizations to further refine and expand its inclusivity initiatives.
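Customizable voices and an adjustable speaking rate can be sketched with OpenAI's text-to-speech endpoint. This minimal Python example assumes the `openai` 1.x client's `audio.speech.create` with the `tts-1` model, the six voices listed in the code, and a speed range of 0.25 to 4.0. The helper name `synthesize_speech` is hypothetical, and the supported voices may have changed since this was written, so check the current API reference.

```python
from typing import Any

# Voices listed for the tts-1 model when this sketch was written (an assumption; verify).
SUPPORTED_VOICES = {"alloy", "echo", "fable", "onyx", "nova", "shimmer"}


def synthesize_speech(client: Any, text: str, voice: str = "alloy", speed: float = 1.0) -> bytes:
    """Return spoken audio bytes (MP3 by default) for `text`.

    A slower `speed` can help listeners who rely entirely on audio output.
    """
    if voice not in SUPPORTED_VOICES:
        raise ValueError(f"unsupported voice: {voice}")
    if not 0.25 <= speed <= 4.0:
        raise ValueError("speed must be between 0.25 and 4.0")
    response = client.audio.speech.create(model="tts-1", voice=voice, input=text, speed=speed)
    return response.content
```

Validating the voice and speed locally gives users an immediate, readable error instead of a failed network round trip.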

OpenAI's Commitment to Responsible AI in Voice Assistant Development

OpenAI emphasizes responsible AI development, establishing clear guidelines and best practices for developers.

- Bias mitigation strategies: OpenAI actively works to identify and mitigate potential biases in its models, ensuring fair and equitable outcomes.

- Transparency in algorithms: OpenAI promotes transparency in the algorithms used in its models to facilitate understanding and accountability.

- Data privacy protection: Data privacy and security are paramount, with robust measures in place to protect user information.

- Ethical considerations: OpenAI addresses potential ethical dilemmas related to voice assistants, promoting responsible development and deployment practices.

OpenAI provides developers with resources and tools to identify and address potential biases in their own projects. This commitment to responsible AI is crucial for building trustworthy and beneficial voice assistant technologies.
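One concrete data privacy measure a developer can apply is to redact obvious personal identifiers from transcripts before they are logged or stored. The sketch below is a hypothetical, deliberately simple regex-based approach in Python, not an OpenAI tool. Real deployments need far broader coverage (names, addresses, locale-specific formats) and careful testing.

```python
import re

# Hypothetical, deliberately simple patterns for illustration only.
_PATTERNS = [
    ("EMAIL", re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")),
    # An optional "+", then at least 9 digits/spaces/brackets/dots/dashes, starting and ending with a digit.
    ("PHONE", re.compile(r"\+?\d[\d\s().-]{7,}\d")),
]


def redact(transcript: str) -> str:
    """Replace e-mail addresses and phone-number-like strings with placeholders."""
    for label, pattern in _PATTERNS:
        transcript = pattern.sub(f"[{label}]", transcript)
    return transcript
```

For example, `redact("Email me at jane.doe@example.com or call +1 (555) 123-4567 tomorrow.")` returns `"Email me at [EMAIL] or call [PHONE] tomorrow."`.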

Conclusion

The 2024 OpenAI event demonstrated a significant leap forward in voice assistant development, offering developers powerful tools and streamlined processes. From pre-trained models to enhanced NLU capabilities and improved accessibility, these advancements pave the way for a new generation of more intelligent, personalized, and inclusive voice assistants. OpenAI's commitment to responsible AI aims to ensure that these advancements are used ethically and beneficially.

Call to Action: Ready to simplify your voice assistant development? Explore OpenAI's latest resources and tools today to build the next generation of voice-enabled experiences and see how OpenAI is shaping the future of voice technology.

Featured Posts

-

The Next Pope How Franciss Legacy Will Shape The Conclave

Apr 22, 2025

The Next Pope How Franciss Legacy Will Shape The Conclave

Apr 22, 2025 -

Ukraine Conflict Trumps Peace Plan And Kyivs Crucial Decision

Apr 22, 2025

Ukraine Conflict Trumps Peace Plan And Kyivs Crucial Decision

Apr 22, 2025 -

Closer Security Links Forged Between China And Indonesia

Apr 22, 2025

Closer Security Links Forged Between China And Indonesia

Apr 22, 2025 -

Harvard Faces 1 Billion Funding Cut Trump Administrations Anger Explodes

Apr 22, 2025

Harvard Faces 1 Billion Funding Cut Trump Administrations Anger Explodes

Apr 22, 2025 -

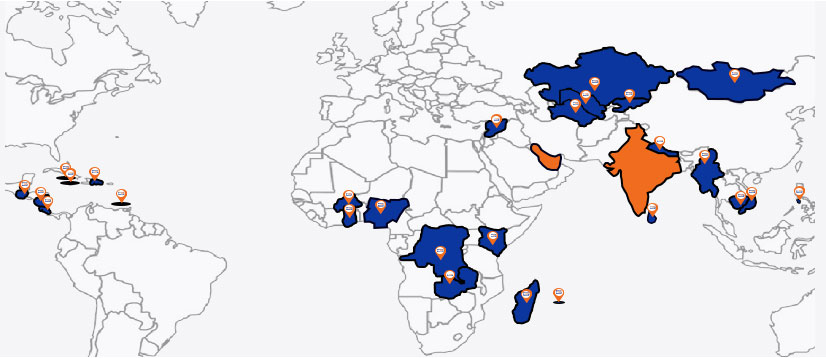

Mapping The Countrys Emerging Business Hotspots

Apr 22, 2025

Mapping The Countrys Emerging Business Hotspots

Apr 22, 2025